Downloading images from multiple websites manually is time-consuming and inefficient. Whether you need 50 images or 5,000, doing this one by one quickly becomes impractical for any serious project.

This comprehensive guide walks you through automating bulk image downloads using our Bulk Image Downloader – a tool that handles everything from single images to thousands of URLs in one operation.

Why Bulk Image Downloading Matters

Before diving into the how-to, let's explore why businesses across industries are automating their image collection processes:

E-commerce & Retail: Back up product catalogs before website updates, create offline product galleries, and organize image assets for inventory management systems.

Digital Marketing Agencies: Create comprehensive visual asset backups for client websites, build organized libraries for campaign materials, and maintain archives of marketing visuals across multiple projects.

Academic Institutions: Create visual datasets for research projects, archive institutional digital assets, and support projects requiring organized image collections from educational websites.

Web Development Teams: Back up website assets before migrations, create offline copies of visual content, and maintain organized asset libraries for development projects.

Getting Started: The Interface Walkthrough

Setting Up Your Image Sources

When you first access our Bulk Image Downloader, you'll land on a clean, intuitive interface designed for both technical and non-technical users.

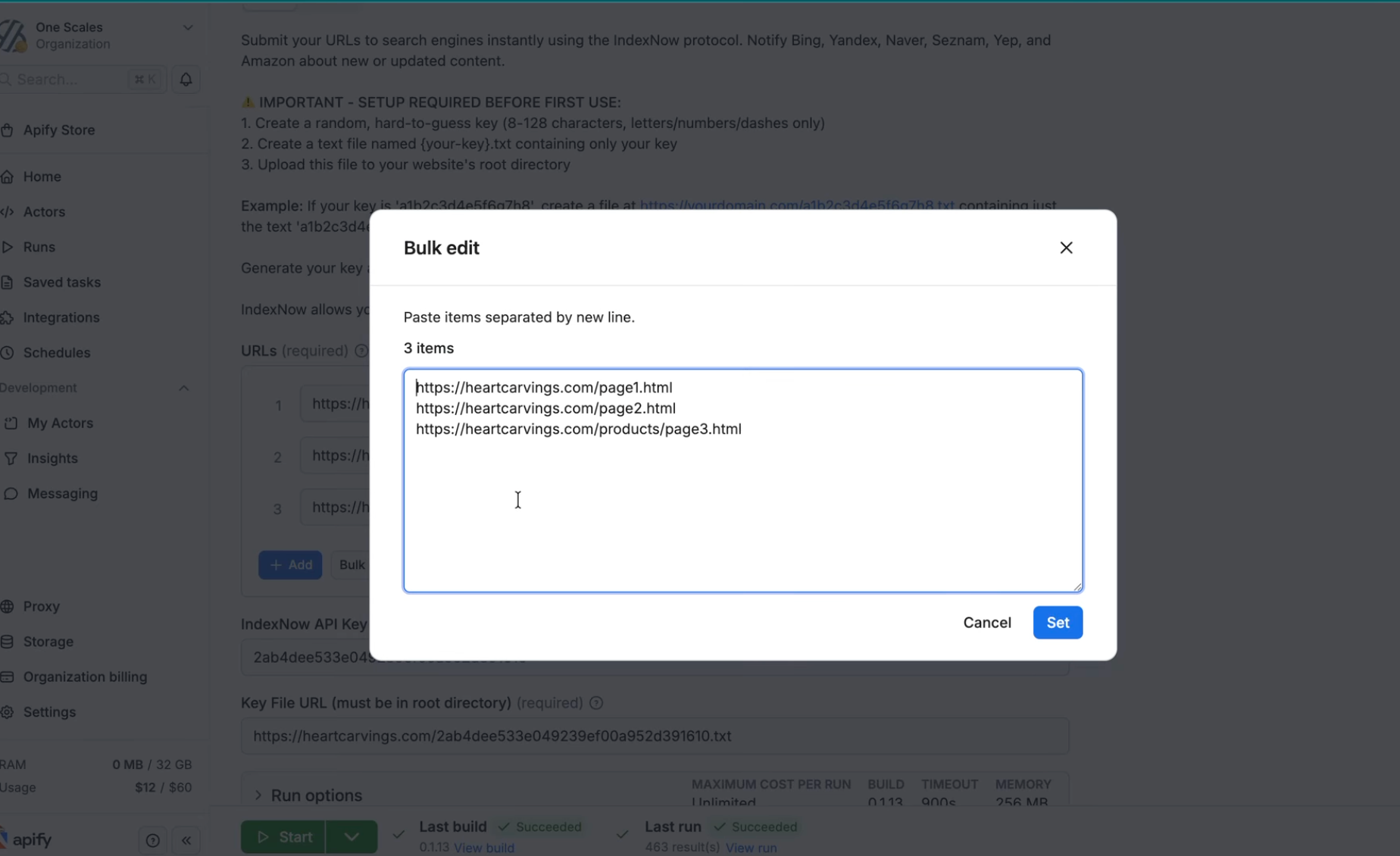

The Start URLs Section is your command center. Here, you can input two types of sources:

- Complete webpages (like https://store.example.com/categories/shoes) to extract every image found on that page

- Direct image URLs (like https://cdn.example.com/product-photo.jpg) for targeted downloads

The interface allows you to add URLs one by one using the "Add" button, or you can bulk-paste multiple URLs if you're working with a large list from a spreadsheet or database.
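If your list comes from a spreadsheet or database, it can help to tidy it before pasting. The sketch below is a hypothetical pre-processing helper, not part of the tool: it drops blank lines, duplicates, and non-web entries, then guesses whether each URL is a direct image or a webpage from its file extension. The extension list is an assumption, and some image URLs have no extension at all, so treat the split as a rough guide.

```python
import posixpath
from urllib.parse import urlparse

# Assumption: these extensions mark a URL as a direct image link.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp", ".svg", ".avif"}


def prepare_start_urls(pasted_text: str) -> dict[str, list[str]]:
    """Hypothetical helper: split pasted text (one URL per line) into images and pages."""
    seen: set[str] = set()
    result: dict[str, list[str]] = {"images": [], "pages": []}
    for line in pasted_text.splitlines():
        url = line.strip()
        if not url or url in seen:
            continue  # skip blank lines and exact duplicates
        parsed = urlparse(url)
        if parsed.scheme not in ("http", "https") or not parsed.netloc:
            continue  # skip entries that are not absolute web URLs
        seen.add(url)
        # Compare the path's extension, case-insensitively, with the assumed list.
        ext = posixpath.splitext(parsed.path)[1].lower()
        result["images" if ext in IMAGE_EXTENSIONS else "pages"].append(url)
    return result


pasted = """
https://store.example.com/categories/shoes
https://cdn.example.com/product-photo.jpg
https://cdn.example.com/product-photo.jpg
not-a-url
"""
print(prepare_start_urls(pasted))
# {'images': ['https://cdn.example.com/product-photo.jpg'], 'pages': ['https://store.example.com/categories/shoes']}
```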

Configuring Your Download Strategy

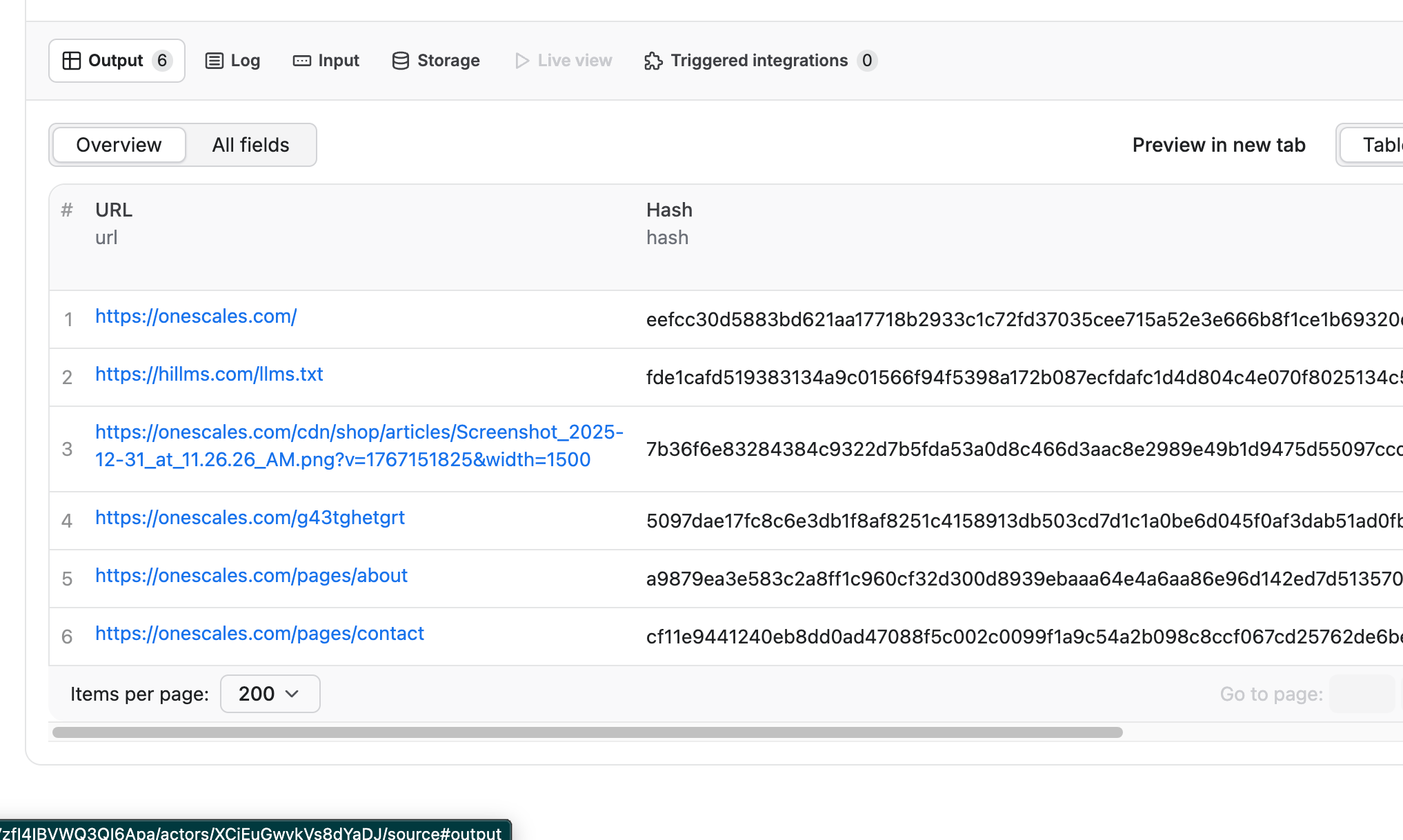

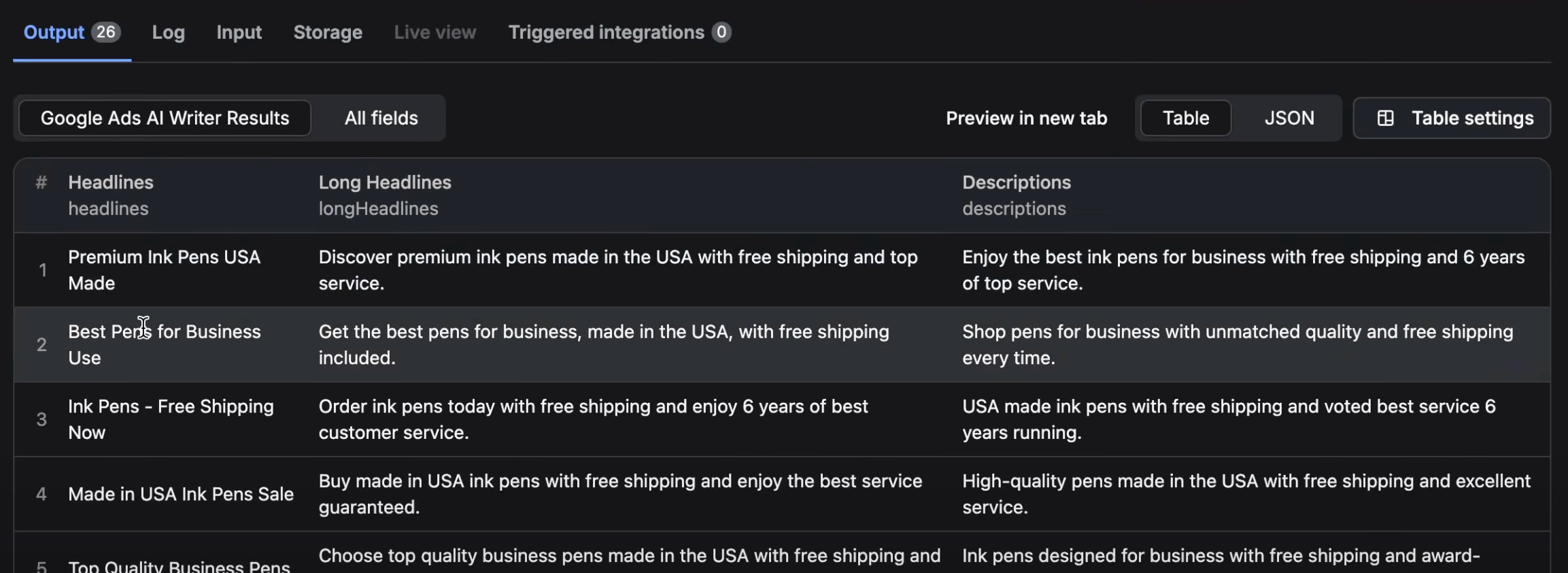

Output Format Selection is where you decide how you want your results organized:

- Detailed List ("List"): Perfect for analysis work. You'll get a comprehensive breakdown of each image including source URL, file size, format, and even thumbnail previews. This format is ideal when you need to audit what you've collected or make selective choices about which images to use.

- Single Archive: Combines all images from all sources into one downloadable ZIP file. Great for simple backup purposes or when you want everything in one place for offline browsing.

- Organized Archives ("ZIP per URL"): Creates a separate ZIP file for each start URL you process. This is the sweet spot for most professional use cases: it keeps your downloads organized while still being efficient.
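To make the difference between Single Archive and Organized Archives concrete, here is a minimal Python sketch (not the tool's implementation) that packs already-downloaded images either into one ZIP or into one ZIP per start URL. The input shape, a mapping from start URL to (filename, bytes) pairs, and the archive naming scheme are assumptions for illustration.

```python
import io
import re
import zipfile

# Assumed input shape: start URL -> list of (filename, image bytes) already downloaded.
ImagesByUrl = dict[str, list[tuple[str, bytes]]]


def _safe_name(url: str) -> str:
    """Turn a URL into a filesystem-friendly name (illustrative naming scheme)."""
    return re.sub(r"[^A-Za-z0-9]+", "_", url).strip("_")


def _zip_bytes(files: list[tuple[str, bytes]]) -> bytes:
    """Write (name, data) pairs into an in-memory ZIP and return its bytes."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name, data in files:
            zf.writestr(name, data)
    return buffer.getvalue()


def single_archive(images_by_url: ImagesByUrl) -> bytes:
    """Everything in one ZIP, with one folder per start URL to avoid name clashes."""
    files = [
        (f"{_safe_name(url)}/{name}", data)
        for url, url_files in images_by_url.items()
        for name, data in url_files
    ]
    return _zip_bytes(files)


def archive_per_url(images_by_url: ImagesByUrl) -> dict[str, bytes]:
    """One ZIP per start URL, keyed by the archive's file name."""
    return {
        f"{_safe_name(url)}.zip": _zip_bytes(url_files)
        for url, url_files in images_by_url.items()
    }


images = {
    "https://store.example.com/categories/shoes": [("sneaker.jpg", b"fake-jpeg-bytes")],
    "https://store.example.com/categories/boots": [("boot.jpg", b"fake-jpeg-bytes")],
}
print(sorted(archive_per_url(images)))
# ['https_store_example_com_categories_boots.zip', 'https_store_example_com_categories_shoes.zip']
```

The per-URL variant produces several smaller files, which is why it tends to stay manageable on large jobs.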

Choosing Your Crawling Method

The Crawler Type setting determines how thoroughly the tool scans for images:

Fast HTML Scanning works by quickly parsing the page's HTML code to find image references. This method is very fast and works well for most websites, especially those that reference their images directly in the page markup.
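The idea behind HTML scanning can be illustrated with Python's standard-library HTML parser: read the markup once and collect image references without running any JavaScript. This is a simplified sketch, not the tool's code. It only looks at `img` tags with a `src` attribute and resolves relative paths against the page URL, so images inserted later by scripts are missed, which is exactly the case browser simulation is meant for.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageSrcCollector(HTMLParser):
    """Collect absolute URLs from img tags' src attributes in static HTML."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.image_urls: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names before calling this.
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            # Resolve relative paths such as "/img/shoe.jpg" against the page URL.
            self.image_urls.append(urljoin(self.base_url, src))


def scan_html_for_images(html: str, base_url: str) -> list[str]:
    collector = ImageSrcCollector(base_url)
    collector.feed(html)
    collector.close()
    return collector.image_urls


page = '<p><img src="/img/shoe.jpg"><img src="https://cdn.example.com/b.png" alt=""></p>'
print(scan_html_for_images(page, "https://store.example.com/categories/shoes"))
# ['https://store.example.com/img/shoe.jpg', 'https://cdn.example.com/b.png']
```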

Full Browser Simulation loads pages just like a human visitor would, waiting for JavaScript to execute and dynamic content to load. This slower method is essential for modern web apps, Instagram-like feeds, or any site where images load as you scroll.

Fine-Tuning Your Collection

Responsive Image Inclusion is a powerful feature that many users overlook. Modern websites serve different image sizes for different devices: your phone gets smaller images while your desktop gets high-resolution versions, with the alternatives typically listed in an image's srcset attribute. Enabling this option captures those variants as well, so the highest-quality version available is included in your results.
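For context, a srcset value is a comma-separated list of candidate URLs, each optionally followed by a width descriptor such as `800w` or a pixel-density descriptor such as `2x`. The hypothetical helper below parses such a list and returns the candidate with the largest descriptor, which is one simple way to pick the highest-quality version. It is a sketch under stated assumptions: candidate URLs contain no commas, and w and x descriptors are not mixed (valid markup does not mix them).

```python
from typing import Optional


def largest_srcset_candidate(srcset: str) -> Optional[str]:
    """Return the srcset URL with the largest width (w) or density (x) descriptor.

    Simplified parser: assumes candidate URLs contain no commas. A candidate
    with no descriptor counts as 1x.
    """
    best_url: Optional[str] = None
    best_value = float("-inf")
    for candidate in srcset.split(","):
        parts = candidate.strip().split()
        if not parts:
            continue
        url = parts[0]
        descriptor = parts[1] if len(parts) > 1 else "1x"
        if descriptor[-1] not in ("w", "x"):
            continue  # ignore descriptors this sketch does not handle
        try:
            value = float(descriptor[:-1])  # "800w" -> 800.0, "2x" -> 2.0
        except ValueError:
            continue
        if value > best_value:
            best_url, best_value = url, value
    return best_url


print(largest_srcset_candidate("shoe-400.jpg 400w, shoe-800.jpg 800w, shoe-1600.jpg 1600w"))
# shoe-1600.jpg
```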

Processing Limits help you control scope and costs. Setting a maximum number of pages prevents runaway processes and helps you estimate completion times. Start with smaller limits for testing, then scale up for production runs.
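Conceptually, a page limit is a counter checked before each new page is processed. The sketch below shows the idea on a breadth-first crawl. The `get_links` callback and the in-memory link map are hypothetical stand-ins for real page fetching, and `max_pages` is an illustrative name rather than the tool's actual setting.

```python
from collections import deque
from typing import Callable, Iterable


def crawl_with_limit(start_urls: Iterable[str],
                     get_links: Callable[[str], list[str]],
                     max_pages: int) -> list[str]:
    """Visit pages breadth-first and stop once max_pages have been processed.

    Hypothetical: get_links stands in for fetching a page and extracting its links.
    """
    queue = deque(dict.fromkeys(start_urls))  # de-duplicate, keep order
    seen = set(queue)
    visited: list[str] = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        visited.append(url)  # a real crawler would scan this page for images here
        for link in get_links(url):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


site = {"/": ["/a", "/b"], "/a": ["/c"], "/b": ["/c", "/d"]}
print(crawl_with_limit(["/"], lambda u: site.get(u, []), max_pages=3))
# ['/', '/a', '/b']
```

Without the `len(visited) < max_pages` check, the loop would keep going until it ran out of links, which on a large site is the runaway process the limit guards against.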

Proxy Configuration is your shield against website blocking. When enabled, your requests appear to come from different locations and IP addresses, reducing the likelihood of being rate-limited or blocked entirely.
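Client-side proxy rotation is commonly done by cycling through a pool of proxy addresses, one per request. The sketch below does that with Python's standard `urllib`. The proxy URLs are placeholders, and this only illustrates the general technique; it is not how the tool's proxy configuration works internally.

```python
import itertools
import urllib.request


class RotatingProxyOpener:
    """Hand out a urllib opener bound to the next proxy in a round-robin pool."""

    def __init__(self, proxy_urls: list[str]):
        if not proxy_urls:
            raise ValueError("need at least one proxy URL")
        self._pool = itertools.cycle(proxy_urls)

    def next_opener(self) -> tuple[str, urllib.request.OpenerDirector]:
        proxy = next(self._pool)
        # Route both http and https requests through the selected proxy.
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)


rotator = RotatingProxyOpener([
    "http://proxy-1.example.net:8000",  # placeholder addresses
    "http://proxy-2.example.net:8000",
])
for _ in range(3):
    proxy, opener = rotator.next_opener()
    print(proxy)
    # opener.open("https://example.com/image.jpg", timeout=30) would go through `proxy`
```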

Real-World Application Examples

Case Study 1: E-commerce Asset Management

An online retailer needed to back up their entire product image catalog before a major website redesign. Using our tool, they:

- Listed all product category pages from their website as start URLs

- Selected "ZIP per URL" to keep each category's images separate

- Enabled responsive image collection to capture all image variants

- Set processing limits appropriate to the catalog's size to keep the backup run within a predictable scope

Result: 2,847 product images organized by category, creating a comprehensive backup that served as both archive and reference during the redesign process.

Case Study 2: Academic Digital Archive

A university library needed to create an organized archive of images from their digital collections website:

- Used the "List" output format to capture detailed metadata for cataloging

- Used Full Browser Simulation (the slower crawler) to capture dynamically loaded collection images

- Set modest processing limits to keep each run to a manageable size

- Used proxy configuration to ensure reliable access during large-scale collection

Result: A dataset of 5,000+ institutional images with complete metadata, enabling proper digital asset management and research access.

Case Study 3: Website Migration Preparation

A digital agency preparing for a client's website platform migration needed to back up all visual assets:

- Input the entire current site structure as start URLs

- Used "Single Archive" format for comprehensive backup

- Enabled responsive image collection to capture all variants

- Used fast HTML scanning for efficient processing

Result: A complete visual asset backup in under an hour, ensuring no images were lost during the platform migration.

Advanced Tips and Strategies

Optimizing for Different Website Types

Static Corporate Sites: Use fast HTML scanning with standard settings. These sites typically have straightforward image implementations that don't require browser simulation.

E-commerce Platforms: Enable responsive image collection and consider using browser simulation if product images load dynamically. Many modern e-commerce sites lazy-load images as users scroll.

Content Management Sites: Often require browser simulation due to dynamic loading implementations. Set appropriate processing limits as these sites can have extensive image catalogs.

Portfolio and Gallery Sites: Often benefit from responsive image collection to capture high-resolution versions. Consider the slower crawler if galleries use JavaScript-based slideshow implementations.

Managing Large-Scale Operations

When processing hundreds or thousands of URLs, consider these strategies:

Batch Processing: Instead of processing 1,000 URLs at once, break them into batches of 100-200. This makes monitoring easier and reduces the risk of losing progress if issues occur.

Quality over Quantity: Use processing limits strategically. It's often better to get the top 50 images from each of 100 sites than to get every image from 10 sites.

Progressive Testing: Start with a small sample to understand what types and quantities of images you'll collect, then scale up with confidence.
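The batching and per-site capping strategies above are easy to script if you prepare inputs yourself. This is a minimal sketch under assumptions: you keep your URL list in Python and submit each batch as a separate run. The batch size of 150 is just one value inside the suggested 100-200 range, and `cap_per_site` keeps the first 50 images it sees per host rather than ranking them by quality.

```python
from collections import defaultdict
from urllib.parse import urlparse


def make_batches(urls: list[str], batch_size: int = 150) -> list[list[str]]:
    """Split a URL list into consecutive batches of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    return [urls[i:i + batch_size] for i in range(0, len(urls), batch_size)]


def cap_per_site(image_urls: list[str], limit: int = 50) -> list[str]:
    """Keep at most `limit` image URLs per host, preserving the original order."""
    counts: dict[str, int] = defaultdict(int)
    kept = []
    for url in image_urls:
        host = urlparse(url).netloc
        if counts[host] < limit:
            counts[host] += 1
            kept.append(url)
    return kept


urls = [f"https://site{i}.example.com/" for i in range(1000)]
batches = make_batches(urls)
print(len(batches), len(batches[-1]))  # prints: 7 100
```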

Troubleshooting Common Challenges

When Images Aren't Being Found

If your results are coming back empty or with fewer images than expected:

- Switch to browser simulation – Many modern sites load images via JavaScript

- Check for authentication requirements – Some sites require login to view images

- Verify URL accessibility – Ensure the pages are publicly accessible

- Enable responsive image collection – Some images might only exist in srcset attributes

Handling Download Failures

When downloads fail or seem incomplete:

- Enable proxy configuration – This resolves many blocking issues

- Reduce request volume – Lower your max items limit so each run sends fewer requests to the target servers

- Check file size limits – Some images might be too large for processing

- Verify image formats – Ensure the files are actually images and not other file types
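One common way to verify that downloaded files really are images is to inspect their first few bytes (the file signature) instead of trusting the extension or the server's Content-Type header. The signatures below are the well-known ones for JPEG, PNG, GIF, and WebP; the helpers themselves are a hypothetical post-processing step you would run on your own downloads, not a feature of the tool.

```python
from typing import Optional


def detect_image_format(header: bytes) -> Optional[str]:
    """Guess the image format from a file's leading bytes; None if unrecognized."""
    if header.startswith(b"\xff\xd8\xff"):
        return "jpeg"
    if header.startswith(b"\x89PNG\r\n\x1a\n"):
        return "png"
    if header[:6] in (b"GIF87a", b"GIF89a"):
        return "gif"
    # WebP: "RIFF", a 4-byte size field, then "WEBP".
    if header[:4] == b"RIFF" and header[8:12] == b"WEBP":
        return "webp"
    return None


def is_image_file(path: str) -> bool:
    """Read the first 12 bytes of a file and check them against known signatures."""
    with open(path, "rb") as f:
        return detect_image_format(f.read(12)) is not None


print(detect_image_format(b"\x89PNG\r\n\x1a\n" + b"\x00" * 8))  # png
print(detect_image_format(b"<!DOCTYPE html>"))  # None
```

An HTML error page saved with a `.jpg` name is a typical failure this catches.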

Managing Large File Outputs

When your ZIP files are becoming unwieldy:

- Use "ZIP per URL" format – This creates smaller, more manageable archives

- Disable responsive images temporarily – This skips the extra size variants and can reduce total download size significantly

- Process smaller batches – Break large jobs into multiple smaller runs

- Filter source URLs – Remove URLs likely to have many images if you don't need comprehensive collection

Best Practices for Professional Use

Ethical Considerations

Always respect website terms of service and copyright laws. Our tool is designed for legitimate business uses like asset management, website backups, academic archiving, and organizational image collection – ensuring proper stewardship of digital assets.

Performance Optimization

- Start every project with a small test run (5-10 URLs) to understand the scope and output

- Use the fastest crawler type that meets your needs

- Enable proxy configuration for any large-scale operation

- Set reasonable processing limits to avoid overwhelming target websites

Organization and Workflow

- Use descriptive naming conventions for your download batches

- Keep a log of which settings work best for different types of websites

- Consider your end-use when choosing output formats

- Plan for post-processing – how will you organize and use the collected images?

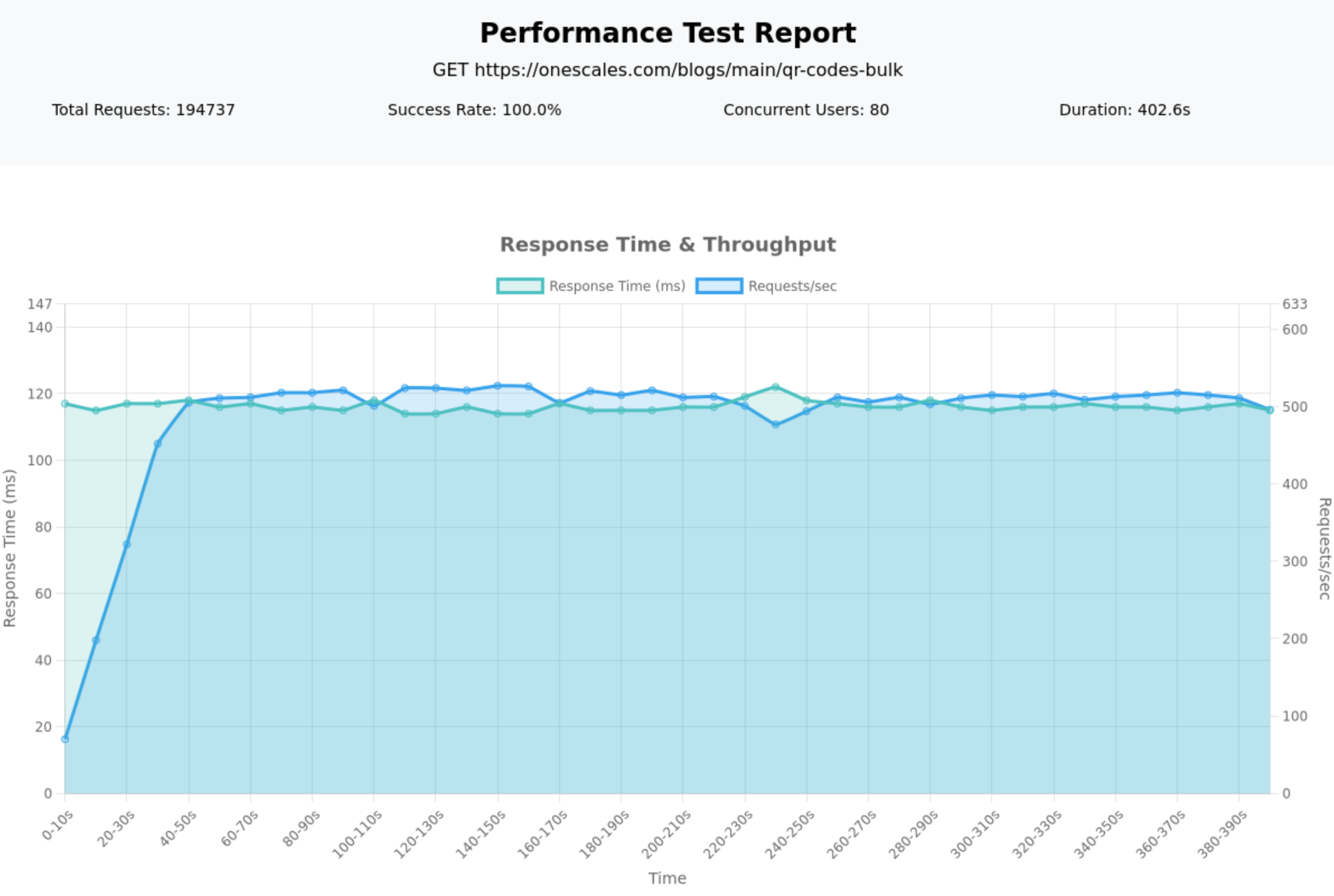

Measuring Success

The true value of automation becomes clear when you consider the alternatives. Manual image downloading typically takes 30-60 seconds per image when you factor in navigation, right-clicking, saving, and organizing. Our tool can process hundreds of images per minute while keeping files consistently organized and providing detailed metadata you'd never capture manually.
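To put the 30-60 seconds per image figure in perspective, here is the arithmetic for a 5,000-image project, the upper end of the range mentioned at the start of this guide, written as a small function so you can plug in your own numbers. At those rates the manual effort comes to roughly 42 to 83 hours, or about one to two 40-hour work weeks.

```python
def manual_hours(num_images: int, seconds_per_image: float) -> float:
    """Estimated hours of manual work at a fixed time per image."""
    return num_images * seconds_per_image / 3600


for seconds in (30, 60):
    print(f"{seconds}s per image: {manual_hours(5000, seconds):.1f} hours")
# 30s per image: 41.7 hours
# 60s per image: 83.3 hours
```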

- Time Savings: What used to take days now takes hours

- Accuracy: No more missed images or broken links

- Organization: Automatic file naming and metadata capture

- Scalability: Handle projects of any size with the same effort

- Consistency: Repeatable processes that work the same way every time

Getting Started Today

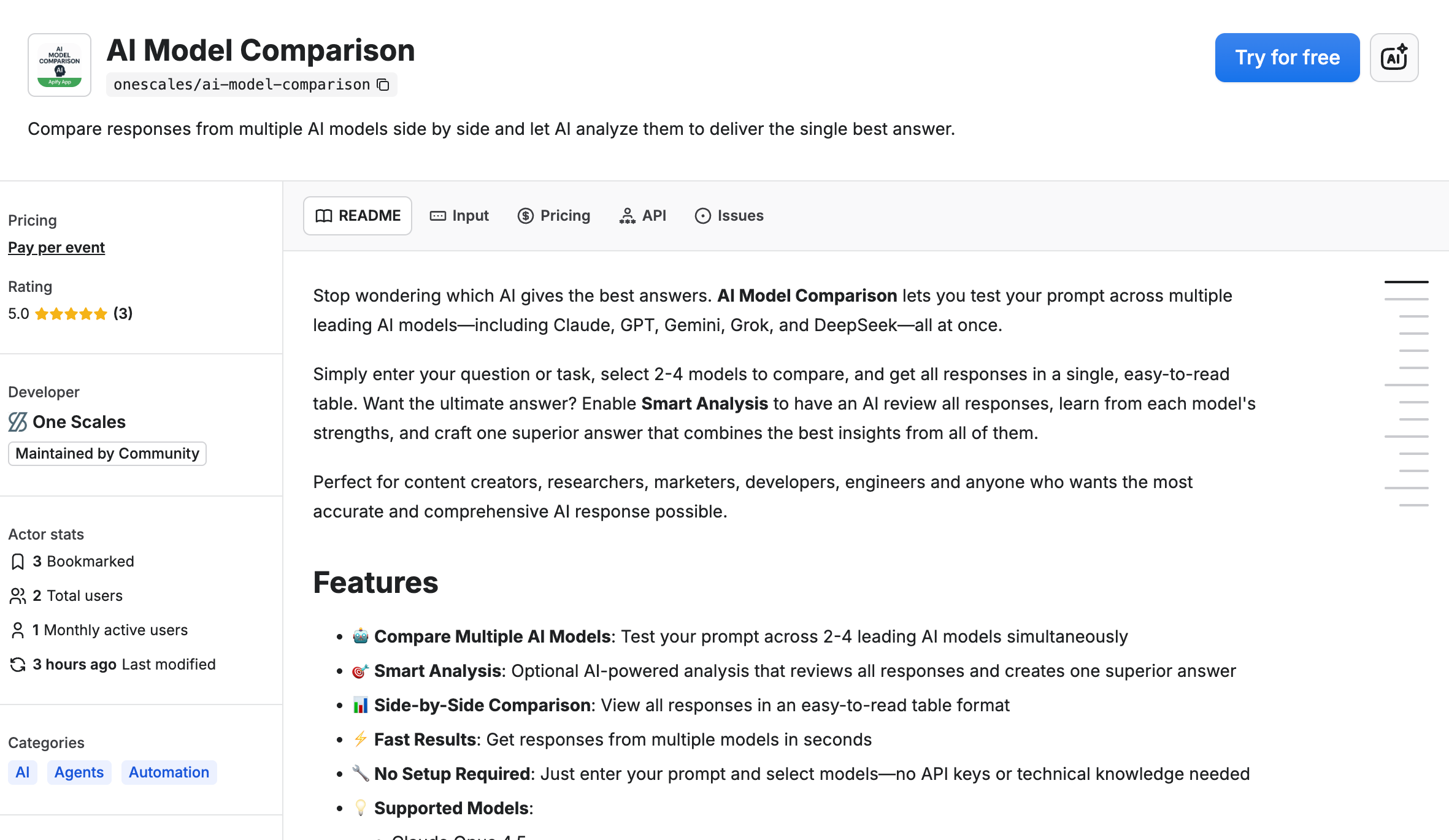

Ready to transform your image collection workflow? The Bulk Image Downloader is available now on the Apify platform. Start with a small test project to familiarize yourself with the interface, then scale up to handle your most ambitious image collection challenges.

Whether you're conducting competitive research, building asset libraries, or managing website migrations, automating your image collection process isn't just about saving time – it's about enabling work that wouldn't be practical to do manually. The insights and efficiencies you'll gain will quickly justify the move from manual to automated processes.

The future of data collection is automated, organized, and accessible. Your image collection workflow should be too.